Contact Information

![[Photo]](img/my_photo.jpg)

- Company Technicolor Research & Innovation

- Email <first_name>(dot)<name>(at)technicolor(dot)com

- Address

Technicolor Research & Innovation

1, avenue de Belle Fontaine, CS 17616

35576 Cesson-Sévigné cedex

France

Profile

- Since 2010

Researcher

Technicolor Research & Innovation, Mixed Reality Project

CGI: screen-space ambient occlusion, procedural city generation and rendering, ...

- 2008-2010

Research Engineer

INRIA Rennes Bretagne Atlantique, Temics Project

3D reconstruction from monocular and multi-view videos, and auto-stereoscopic 3D rendering

- 2004-2007

PhD Thesis from Université de Rennes 1

INRIA Rennes Bretagne Atlantique, Temics Project

Reconstruction of urban areas based on GPS, GIS and Video registration

- 2003-2004

Master's Degree in Computer Science

IFSIC, Université de Rennes 1

Reconstruction of video object models for augmented and virtual reality

- Resume Available in French

Triple Depth Culling

- Abstract

Virtual worlds feature increasing geometric and shading complexity, resulting in a constant need for effective solutions to avoid rendering objects that are invisible to the viewer. This is particularly true in the context of real-time rendering of highly occluded environments such as urban areas, landscapes or indoor scenes. This problem has been intensively researched over the past decades, resulting in numerous optimizations building upon the well-known Z-buffer technique. Among them, graphics hardware extensions such as early Z-culling efficiently avoid shading most invisible fragments. However, this technique is not applicable when the fragment shader discards fragments or modifies their depth value, or when alpha testing is enabled.

We introduce Triple Depth culling for fast and controllable per-pixel visibility at the fragment shading stage using multiple depth buffers. Based on alternate rendering of object batches, our method effectively avoids the shading of hidden fragments in a single pass, hence reducing the overall rendering cost. Our approach provides effective control over how culling is performed prior to shading, regardless of potential discard or alpha testing operations. Triple Depth culling is also complementary to the existing culling stages of graphics hardware, making our method easy to integrate as an additional stage of the graphics pipeline.

- Location Technicolor Research & Innovation

- Downloads [ Paper ] [ Video (Available soon) ] ACM Siggraph'11

- Links [ View Paper ] [ Video Stream ] [ Book Publisher ] [ Buy Me! ]

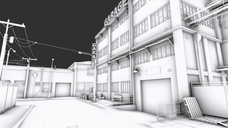

Real-Time Procedural Geometry

- Abstract

We introduce procedural geometry mapping and ray-dependent grammar development for fast and scalable render-time generation of procedural geometric details on graphics hardware. By leveraging the properties of the widely used split grammars, we replace geometry generation by lazy per-pixel grammar development. This approach drastically reduces the memory costs while implicitly concentrating the computations on objects spanning large areas in image space. Starting with a building footprint, the bounding volume of each facade is projected towards the viewer. For each pixel we lazily develop the grammar describing the facade and intersect the potentially visible split rules and terminal shapes. Further geometric details are added using normal and relief mapping in terminal space. Our approach also supports the computation of per-pixel self shadowing on facades for high visual quality. We demonstrate interactive performance even when generating and tuning large cityscapes comprising thousands of facades. The method is generalized to arbitrary mesh-based shapes to provide full artistic control over the generation of the procedural elements, making it also usable outside the context of urban modeling.
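The idea of lazy per-pixel grammar development can be illustrated with a small sketch. It assumes a toy split grammar in which each rule cuts a rectangle along one axis into parts of fixed relative size; the `RULES` table, the symbol names and the `develop` function are hypothetical and are not taken from the paper. Rather than generating every terminal shape of the facade, only the rule branch containing the queried point is expanded.

```python
# Toy split grammar: each non-terminal splits its rectangle along one axis
# into consecutive parts given as (relative size, child symbol).
# All symbols and sizes are hypothetical, chosen only for illustration.
RULES = {
    "facade": ("y", [(0.2, "ground"), (0.8, "floors")]),
    "floors": ("y", [(0.5, "floor"), (0.5, "floor")]),
    "floor": ("x", [(0.25, "wall"), (0.5, "window"), (0.25, "wall")]),
}


def develop(symbol, u, v):
    """Lazily develop the grammar for a single sample point.

    (u, v) are coordinates in [0, 1) relative to the rectangle of `symbol`.
    Only the branch containing the point is expanded. Returns the terminal
    symbol reached and the point's coordinates inside that terminal.
    """
    while symbol in RULES:
        axis, parts = RULES[symbol]
        t = u if axis == "x" else v
        start = 0.0
        for index, (size, child) in enumerate(parts):
            is_last = index == len(parts) - 1
            # The last part also absorbs any floating-point rounding error.
            if t < start + size or is_last:
                local = (t - start) / size
                break
            start += size
        # Re-express the point in the coordinate frame of the chosen part.
        if axis == "x":
            u = local
        else:
            v = local
        symbol = child
    return symbol, u, v
```

Calling `develop("facade", 0.5, 0.6)` walks three rules and touches none of the sibling shapes, which is the property that keeps memory costs low: no geometry is stored, and the work done is proportional to the number of samples actually evaluated.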

- Location Technicolor Research & Innovation

- Downloads [ Paper ] [ Video (Results) ] GI'11

- Links [ Video Stream ]

Screen Space Ambient Occlusion

- Abstract

Ambient occlusion improves the realism of synthetic images by accounting for the overall light attenuation due to local occlusions. This technique recently became a popular tool to produce realistic images, either for offline rendering or in interactive applications such as games. For offline rendering, ambient occlusion (AO) is traditionally computed by casting many rays from each visible point and counting the rays that hit geometry in the close vicinity of that point. To achieve interactive frame rates, AO is approximated in screen space, using the depth buffer to represent the scene geometry, and relies on analytical or numerical tricks to replace the ray-traced occluder search.

We present a novel method for computing screen-space ambient occlusion (SSAO) at interactive rates, while preserving the original formulation of the standard ray-traced ambient occlusion. Based on ray-marching on graphics hardware, our method achieves interactive frame rates with physically consistent results, even with few samples. Our SSAO computation fits within a standard deferred shading framework, making it easy to integrate into post-production / previsualization workflows, or into computer games.
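As a rough illustration of ray-marching a depth buffer to estimate ambient occlusion, here is a minimal CPU sketch. It is not the method of the paper: it assumes an orthographic view, a surface normal facing the camera, and a fixed ring of ray directions at 45 degrees elevation instead of a Poisson disk distribution. The function name `ray_marched_ao` and its parameters are hypothetical.

```python
import math


def ray_marched_ao(depth, x, y, radius=4.0, steps=8, azimuths=8):
    """Estimate ambient occlusion at pixel (x, y) of a depth buffer.

    Assumptions of this sketch: orthographic view, depth grows away from the
    camera, the normal points at the camera, and rays leave the surface at
    45 degrees elevation in `azimuths` evenly spaced directions.
    Returns the fraction of unoccluded rays (1.0 means fully open).
    """
    height, width = len(depth), len(depth[0])
    z0 = depth[y][x]
    occluded = 0
    for k in range(azimuths):
        phi = 2.0 * math.pi * k / azimuths
        # Unit direction at 45 degrees elevation, heading toward the camera.
        dx = math.cos(phi) * math.sqrt(0.5)
        dy = math.sin(phi) * math.sqrt(0.5)
        dz = -math.sqrt(0.5)
        for s in range(1, steps + 1):
            t = radius * s / steps
            px = round(x + t * dx)
            py = round(y + t * dy)
            if not (0 <= px < width and 0 <= py < height):
                break  # the ray left the screen: counted as unoccluded
            # The ray is blocked when the stored surface is closer to the
            # camera than the current sample along the ray.
            if depth[py][px] < z0 + t * dz:
                occluded += 1
                break
    return 1.0 - occluded / azimuths


# A flat floor at depth 10, and the same floor with a tall wall on the right.
flat = [[10.0] * 9 for _ in range(9)]
walled = [[10.0 if col < 6 else 0.0 for col in range(9)] for _ in range(9)]
ao_flat = ray_marched_ao(flat, 4, 4)
ao_walled = ray_marched_ao(walled, 4, 4)
```

Each ray stops at its first hit, so the estimate keeps the "fraction of blocked rays" form of ray-traced AO while only reading the depth buffer. On the GPU the same loop would typically run per pixel in a fragment or compute shader.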

- Location Technicolor Research & Innovation

- Downloads [ Paper ] [ Video (Results) ] [ Video (Fast-forward) ] I3D '11

- Links [ View Paper ] [ Video Stream (Results) ]

Depth Maps Estimation for 3DTV Contents

- Abstract

We explore in this work several depth map estimation methods in different video contexts. For standard (monocular) videos of a fixed scene with a moving camera, we present a technique to extract both the 3D structure of the scene and the camera poses over time. This information is exploited to generate dense depth maps for each image of the video, through optical flow estimation algorithms. We also present a depth map extraction method for multi-view sequences, aimed at generating MVD (multi-view plus depth) content for 3DTV. These works are compared to the approaches used, at the time of writing, in the 3DV group of MPEG for standardization purposes. Finally, we demonstrate how such depth maps can be exploited to perform relief auto-stereoscopic rendering in a dynamic and interactive way, without sacrificing the real-time computation constraint.

- Location INRIA Rennes Bretagne Atlantique

- Downloads [ Technical Report ] [ View Paper ]

- Links [ View Report ]

- Thanks This work could not have been achieved without these guys' work

PhD Thesis - 3D Reconstruction of Urban Areas

Reconstruction of urban areas based on GPS, GIS and Video registration

- Abstract

This thesis presents a new scheme for 3D building reconstruction, using GPS, GIS and Video datasets. The goal is to refine simple, geo-referenced 3D models of buildings extracted from a GIS (Geographic Information System) database. This refinement is performed by registering these models with the video. The GPS provides rough information about the camera location.

First, the registration between the video and the 3D models using robust virtual visual servoing is presented. The aim is to find, for each image of the video, the geo-referenced pose of the camera (position and orientation) such that the rendered 3D models project exactly onto the building images in the video.

Next, textures of visible buildings are extracted from the video images. A new algorithm for façade texture fusion based on statistical analysis of the texel colors is presented. It removes from the final textures all occluding objects in front of the viewed building façades.
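To give an idea of how a per-texel statistic can remove occluders, here is a minimal sketch that fuses several aligned observations of the same façade texture with a per-channel median. The median is an assumption chosen for illustration, not necessarily the statistic used in the thesis, and `fuse_textures` is a hypothetical name.

```python
from statistics import median


def fuse_textures(observations):
    """Fuse several aligned observations of the same facade texture.

    `observations` is a list of images of equal size, each a list of rows of
    (r, g, b) tuples. Each fused texel is the per-channel median over all
    observations, so a color seen in only a minority of frames (for example
    a passing car) is rejected. Using the median is an assumption here.
    """
    rows, cols = len(observations[0]), len(observations[0][0])
    fused = []
    for i in range(rows):
        fused_row = []
        for j in range(cols):
            # All colors observed for this texel across the frames.
            samples = [obs[i][j] for obs in observations]
            fused_row.append(
                tuple(median(channel) for channel in zip(*samples))
            )
        fused.append(fused_row)
    return fused


# Three observations of a 1x2 texture; one frame has an occluder on the left.
wall, occluder = (200, 180, 160), (20, 20, 20)
frames = [[[wall, wall]], [[occluder, wall]], [[wall, wall]]]
fused = fuse_textures(frames)
```

This only works when the façade is visible in most frames for each texel; an occluder present in the majority of observations would survive the fusion.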

Finally, a preliminary study on the extraction of façade geometric details is presented. Knowing the pose of the camera for each image of the video, a disparity computation using either graph-cuts or optical flow is performed in texture space. The micro-structures of the viewed façades can then be recovered using these disparity maps.

- Advisors Luce Morin and Kadi Bouatouch

- Location INRIA Rennes Bretagne Atlantique

- Downloads

[ PDF File ] (in French, 25.4MB)

[ PDF File ] (in French, Low resolution version, 9.4MB)

- Links [ View Manuscript ]

Journals

- Gaël Sourimant, Thomas Colleu, Vincent Jantet, Luce Morin and Kadi Bouatouch. Toward automatic GIS-video initial registration. Annals of Telecommunications, Volume 67, Issue 1, pp. 1-13, 2012, Springer Paris. [ link ] [ bib ]

International Conferences and Workshops

- Jean-Eudes Marvie, Cyprien Buron, Pascal Gautron, Patrice Hirtzlin and Gaël Sourimant. GPU Shape Grammars. Pacific Graphics 2012, Hong Kong, People's Republic of China, September, 2012. [ pdf ] [ bib ]

- Jean-Eudes Marvie, Pascal Gautron and Gaël Sourimant. Triple Depth Culling. ACM Siggraph 2011, Vancouver, BC, Canada, September, 2011. [ pdf ] [ bib ]

- Jean-Eudes Marvie, Pascal Gautron, Patrice Hirtzlin and Gaël Sourimant. Render-Time Procedural Per-Pixel Geometry Generation. Graphics Interface 2011, St. John's, Newfoundland, Canada, May, 2011. [ pdf ] [ bib ]

- Gaël Sourimant, Pascal Gautron and Jean-Eudes Marvie. Poisson Disk Ray-Marched Ambient Occlusion. ACM SIGGRAPH Symposium on Interactive 3D Games and Graphics (I3D Posters), San Francisco, USA, February, 2011. [ pdf ] [ bib ]

- Gaël Sourimant. A simple and efficient way to compute depth maps for multi-view videos. 3DTV'Conference - The True Vision - Capture, Transmission and Display of 3D Video (3DTV-CON), Tampere, Finland, June, 2010. [ pdf ] [ bib ]

- Thomas Colleu, Gaël Sourimant and Luce Morin. Automatic Initialization for the Registration of GIS and Video Data. 3DTV'Conference - The True Vision - Capture, Transmission and Display of 3D Video (3DTV-CON), pp. 49-52, Istanbul, Turkey, May, 2008. [ pdf ] [ bib ]

- Gaël Sourimant, Luce Morin and Kadi Bouatouch. Gps, Gis and Video Registration for Building Reconstruction. ICIP 2007, 14th IEEE International Conference on Image Processing, San Antonio, Texas, USA, September, 2007. The original publication is available at www.springerlink.com [ pdf ] [ bib ]

- Gaël Sourimant, Luce Morin and Kadi Bouatouch. Gps, Gis and Video fusion for urban modeling. CGI 2007, 25th Computer Graphics International Conference, Petropolis, RJ, Brazil, May, 2007. [ pdf ] [ bib ]

- Gaël Sourimant and Luce Morin. Model-Based Video Coding with Generic 2D and 3D Models. Proceedings of Mirage 2005 (Computer Vision / Computer Graphics Collaboration Techniques and Applications), INRIA Rocquencourt, France, March, 2005. [ pdf ] [ bib ]

National Conferences / French-speaking International Conferences

- Gaël Sourimant, Thomas Colleu, Vincent Jantet and Luce Morin. Recalage GPS / SIG / Video, et synthèse de textures de bâtiments. COmpression et REpresentation des Signaux Visuels (CORESA 2009), Toulouse, France, March, 2009. Prize: Best paper award [ pdf ] [ bib ]

- Thomas Colleu, Gaël Sourimant and Luce Morin. Une méthode d'initialisation automatique pour le recalage de données SIG et vidéo. COmpression et REpresentation des Signaux Visuels (CORESA 2007), Montpellier, France, November, 2007. [ pdf ] [ bib ]

- Gaël Sourimant, Luce Morin and Kadi Bouatouch. Vers une reconstruction 3D de zones urbaines : mise en correspondance de données Gps, Sig et Vidéo. GRETSI 2007, 21e Colloque en Traitement du Signal et des Images, Troyes, France, September, 2007. [ pdf ] [ bib ]

- Gaël Sourimant, Luce Morin and Kadi Bouatouch. Fusion de données Gps, Sig et Vidéo pour la reconstruction d'environnements urbains. Orasis 2007, onzième congrès francophone des jeunes chercheurs en vision par ordinateur, Obernai, France, June, 2007. [ pdf ] [ bib ]

- Gaël Sourimant and Luce Morin. Codage vidéo basé sur des modèles génériques 2D et 3D. Orasis 2005, dixième congrès francophone des jeunes chercheurs en vision par ordinateur, Fournols, France, May, 2005. [ pdf ] [ bib ]

Technical Reports

- Gaël Sourimant. Depth maps estimation and use for 3DTV. Inria Technical Report, no. 0379, Rennes, France, February, 2010. [ pdf ] [ bib ]

Thesis

- Gaël Sourimant. Reconstruction de scènes urbaines à l'aide de fusion de données de type GPS, SIG et Video. PhD thesis, Thèse de doctorat en Informatique, Université de Rennes 1, France, December, 2007. [ pdf ] [ bib ]

Books and Book Chapters

- Pascal Gautron, Jean-Eudes Marvie and Gaël Sourimant. Z3 Culling. GPU Pro 3: Advanced Rendering Techniques, A K Peters/CRC Press, ISBN-13: 978-1439887820, February, 2012. [ link ] [ bib ]

- Gaël Sourimant. Reconstruction 3D de scènes urbaines. Editions Universitaires Européennes, ISBN 978-613-1-55456-8, 2010. [ link ] [ bib ]